Deploying the AI art tool stable-diffusion-webui locally, with multiple models (NSFW-capable)

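AI image generation has been very popular lately. This article covers a PC with an NVIDIA GPU: deploying the stable-diffusion-webui front end locally and replacing the default model, so that you can generate high-quality anime-style images without output restrictions (online services usually block NSFW content).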

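- AI art series, part 1: Deploying the AI art tool stable diffusion webui locally, with multiple models (NSFW-capable)

- AI art series, part 2: AI art guide: how to set up and use stable diffusion webui (SD webui)

- AI art series, part 3: One-click installer for the AI art tool stable diffusion webui (A1111-Web-UI-Installer)

- AI art series, part 4: AI art: overview of common stable diffusion webui models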

Where to download the required resources:

Git:https://git-scm.com/download

CUDA:https://developer.nvidia.com/cuda-toolkit-archive

Python3.10.6:https://www.python.org/downloads/release/python-3106/

waifu-diffusion:https://huggingface.co/hakurei/waifu-diffusion

waifu-diffusion-v1-3:https://huggingface.co/hakurei/waifu-diffusion-v1-3

stable-diffusion-v-1-4-original: https://huggingface.co/CompVis/stable-diffusion-v-1-4-original

stable-diffusion-v-1-5: https://huggingface.co/runwayml/stable-diffusion-v1-5

stable-diffusion-webui:https://github.com/AUTOMATIC1111/stable-diffusion-webui

NovelAILeaks 4chan:https://pub-2fdef7a2969f43289c42ac5ae3412fd4.r2.dev/naifu.tar

NovelAILeaks animefull-latest:https://pub-2fdef7a2969f43289c42ac5ae3412fd4.r2.dev/animefull-latest.tar


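Getting started

First, make sure you have basic comprehension and hands-on skills, and that you can search the web for information. Questions that are too basic, such as "what is a command line", "how do I download a file", "what is a magnet link", "I can't read a screen full of English", or "how do I put Python and Git behind a proxy", are not explained or answered here.

To run stable-diffusion-webui and its models smoothly, you need enough VRAM: 4 GB is the minimum, 6 GB the baseline, and 12 GB the recommended amount.

System RAM should not be too small either; more than 16 GB is best.

VRAM size determines how large an image you can generate. In general, the larger the image, the more room the AI has to work with and the more detail fills the picture.

GPU clock speed and memory bus width determine how fast you can generate.

When VRAM runs short, the only option is to trade time for memory: generating the same image then takes 4 times, or even 8 to 10 times, as long.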

The environment used for this tutorial:

CPU: Intel® Core™ i7-10750H

GPU: NVIDIA Quadro T2000 with Max-Q Design (4 GB VRAM)

RAM: 16 GB × 4

Disk: 1 TB × 2 SSD

OS:win11 21H1

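Preparing the runtime environment

You need three or four things.

Python 3.10.6, Git, and CUDA; the download links for all three are at the top of the article.

Depending on your situation, you may also need a proxy tool (this article assumes it listens on 127.0.0.1:6808).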

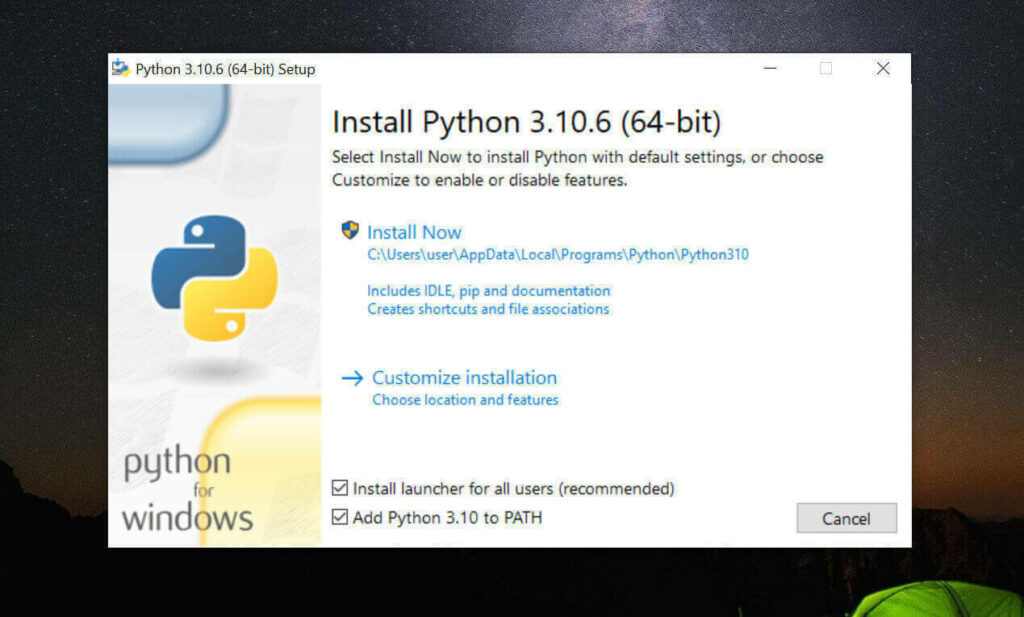

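Installing Python 3.10.6 and pip

Be sure to install Python 3.10.6. Other versions are very likely to fail.

Here Python 3.10.6 is installed directly into the system.

If you know Miniconda, you can use it to switch between Python versions instead; that is not covered here, so work it out yourself if you need it.

- Visit the Python 3.10.6 download page.

- Scroll to the bottom of the page, find [Windows installer (64-bit)], and download it.

- During installation, tick Add Python to PATH when you reach that step, then click Install Now above it.

- After installation, run `python -V` on the command line. If it prints Python 3.10.6, the installation succeeded.

- Run `python -m pip install --upgrade pip` on the command line to upgrade pip to the latest version.

Once more: install Python 3.10.6. Other versions are very likely to fail.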
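The version check above can also be scripted. The following is a minimal sketch, not part of stable-diffusion-webui; the function name `is_required_python` is made up for illustration, and the required version is simply the 3.10.6 that this article insists on.

```python
import sys

# Version the article asks for; adjust if the project's requirements change.
REQUIRED = (3, 10, 6)


def is_required_python(version_info=None, required=REQUIRED):
    """Return True when major.minor.micro matches the required version."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:3]) == tuple(required)


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    wanted = ".".join(str(part) for part in REQUIRED)
    if is_required_python():
        print(f"Python {current}: OK")
    else:
        print(f"Python {current}: expected {wanted}")
```

Run it with the same `python` that the command line finds, so that it reports on the interpreter that is actually on PATH.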

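Installing Git

- Visit the Git download page.

- Click [Download for Windows], then [64-bit Git for Windows Setup] to download.

- Click Next all the way through the installer.

- Run `git --version` on the command line. If it prints git version 2.XX.0.windows.1, the installation succeeded.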
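If you prefer to check Git from a script, a sketch along these lines may help. The helper names are hypothetical; the only assumption about Git itself is that `git --version` prints a line of the form shown above.

```python
import re
import shutil
import subprocess


def parse_git_version(output):
    """Extract (major, minor, patch) from `git --version` output, or None."""
    match = re.search(r"git version (\d+)\.(\d+)\.(\d+)", output)
    return tuple(int(group) for group in match.groups()) if match else None


def installed_git_version():
    """Return the installed Git version tuple, or None if git is not on PATH."""
    if shutil.which("git") is None:
        return None
    result = subprocess.run(
        ["git", "--version"], capture_output=True, text=True, check=False
    )
    return parse_git_version(result.stdout)
```

`installed_git_version()` returning `None` usually means Git is not installed or its folder is missing from PATH.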

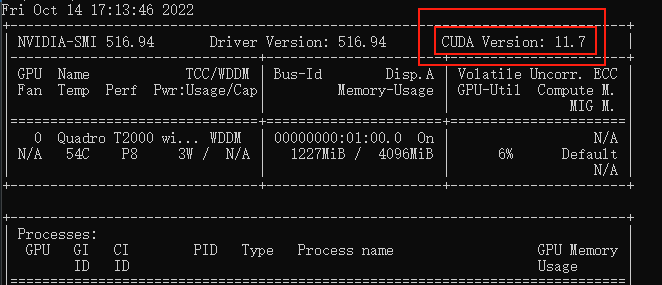

安装 CUDA (nvidia显卡用户)

-

命令行运行

nvidia-smi,看下自己显卡支持的 CUDA版本

(升级显卡驱动有可能会让你支持更高版本的 CUDA)

-

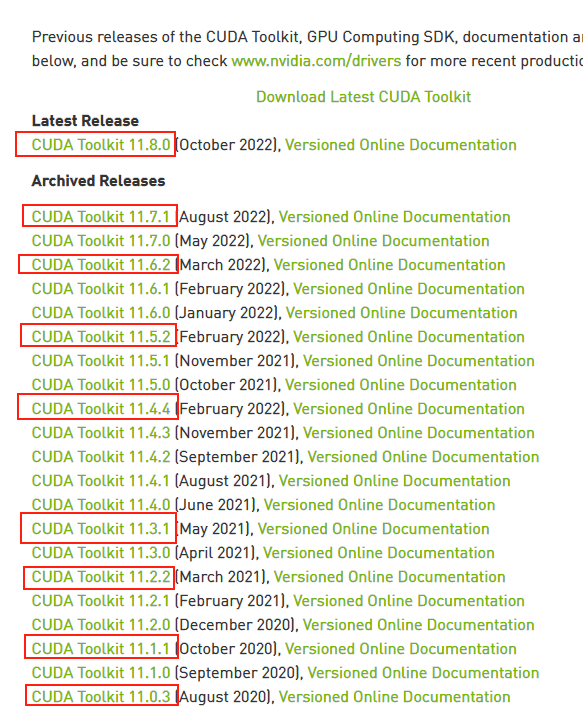

接下来前往英伟达 CUDA 官网,下载对应版本。

注意请下载,你对应的版本号最高的版本,比如我的是11.7的,那就下11.7.1(这里最后的.1意思是,11.7版本的1号升级版)

-

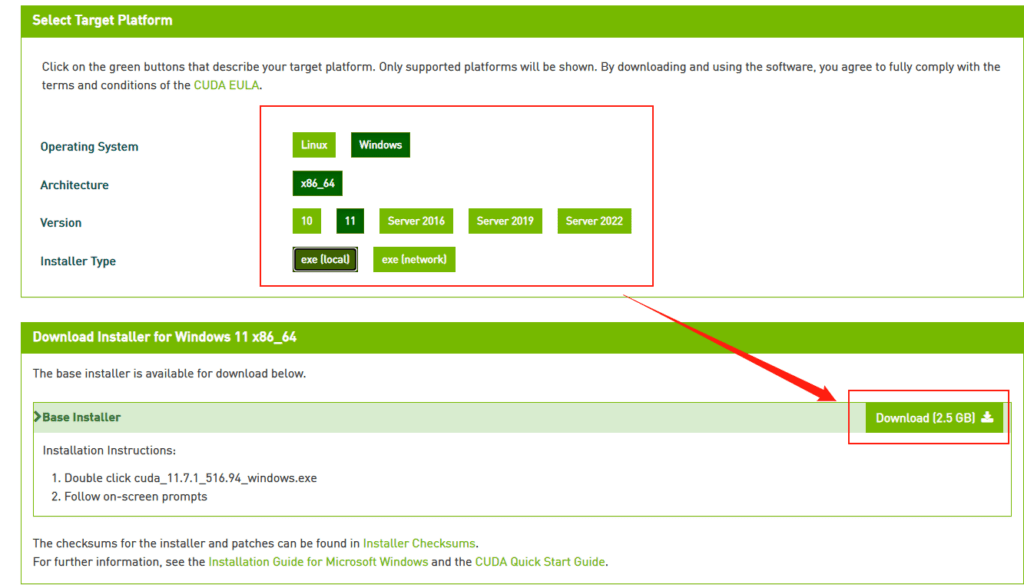

选你自己的操作系统版本,注意下个离线安装包【exe [local]】,在线安装的话,速度还是比较堪忧的。
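Reading the CUDA version out of `nvidia-smi` can be sketched as follows. This assumes the header of the `nvidia-smi` output contains the text `CUDA Version: X.Y`, which is how recent drivers print it; the function names are illustrative only.

```python
import re
import shutil
import subprocess


def parse_cuda_version(smi_output):
    """Return (major, minor) from the 'CUDA Version: X.Y' header, or None."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    return (int(match.group(1)), int(match.group(2))) if match else None


def driver_cuda_version():
    """Run nvidia-smi and parse its header; None if the tool is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi"], capture_output=True, text=True, check=False
    )
    return parse_cuda_version(result.stdout)
```

A result of `(11, 7)` would correspond to the 11.7 example in the text, for which the 11.7.1 toolkit is the one to download.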

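Downloading stable-diffusion-webui (NVIDIA GPU users)

Pick a directory you like, type CMD into the File Explorer address bar, press Enter to open a Command Prompt window, and enter the following commands

rem Download the project source

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

rem Change to the project root

cd stable-diffusion-webui

- This creates a stable-diffusion-webui folder in the directory you chose and puts the project there.

- The program itself, its virtual environment, the models, and the enhancement scripts add up to a lot of space. Mine already takes nearly 20 GB, so put it on a drive with room to spare.

- Keep the whole path free of Chinese characters (such as "C:\AI作画工具\") and of spaces (such as "C:\Program Files"); this avoids many odd problems.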
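The path rules in the last note are easy to check mechanically. This is a small sketch with a hypothetical helper name; it only encodes the two rules stated above (no non-ASCII characters, no spaces).

```python
def path_problems(path):
    """Return a list of reasons why `path` breaks the article's path rules."""
    problems = []
    if any(ch.isspace() for ch in path):
        problems.append("contains whitespace")
    if not path.isascii():
        problems.append("contains non-ASCII characters")
    return problems


if __name__ == "__main__":
    for candidate in (r"D:\sd\stable-diffusion-webui", r"C:\Program Files\sd"):
        issues = path_problems(candidate)
        print(candidate, "->", ", ".join(issues) if issues else "OK")
```

An empty list means the path satisfies both rules.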

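Downloading stable-diffusion-webui (AMD GPU users)

These steps are for AMD GPU users; NVIDIA users can skip them. stable-diffusion-webui does not officially support AMD yet, so this uses the fork maintained by lshqqytiger (stable-diffusion-webui-directml). The Training feature is unavailable to AMD users, so you cannot train, but other features such as text-to-image, image-to-image, inpainting, extensions, and LoRAs all work normally.

Pick a directory you like, type CMD into the File Explorer address bar, press Enter to open a Command Prompt window, and enter the following command

rem Download the project source and install it

git clone https://github.com/lshqqytiger/stable-diffusion-webui-directml && cd stable-diffusion-webui-directml && git submodule init && git submodule update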

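Downloading the model files

stable-diffusion-webui is only a tool; it needs a trained model on the back end for the AI to draw from.

The mainstream models at the moment are

- stable-diffusion: leans toward realistic people (usually called the SD model or SDwebui model)

- waifu-diffusion: leans toward anime style (usually called the Waifu model or WD model)

- Novel-AI-Leaks: leans even further toward anime style (usually called the Naifu model)

Model files tend to be large, so download them to a disk with plenty of free space.

For more model downloads, see: "AI art: overview of common models"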

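Rough differences between the models

| Name | Requirement | Performance | Notes |
|---|---|---|---|
| stable-diffusion (4GB emaonly model) | from 2 GB VRAM | about 10 s per image, up to 920×920 in one pass | good for generating images |
| stable-diffusion (7GB full ema model) | from 4 GB VRAM | includes the weights from the last training step, so it uses more VRAM | good for training |
| waifu (Float 16 EMA model) | from 2 GB VRAM | close to stable-diffusion in performance, slightly higher VRAM use | good for generating images |
| waifu (Float 32 EMA model) | from 2 GB VRAM | close to stable-diffusion in performance, slightly higher VRAM use | good for generating images; output quality differs little from Float 16 |
| waifu (Float 32 Full model) | from 4 GB VRAM | close to stable-diffusion in performance, slightly higher VRAM use | good for generating images or training |
| waifu (Float 32 Full + Optimizer model) | from 8 GB VRAM | close to stable-diffusion in performance, slightly higher VRAM use | good for training |
| Naifu (4GB pruned model) | 8 GB VRAM minimum | fairly close to the official service | good for generating images |
| Naifu (7GB latest model) | 8 GB VRAM minimum (can rise toward 10 GB) | fairly close to the official service | good for generating images or training |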

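- The VRAM figures here are what the software itself needs while generating at 512×512 with default settings. "From 2 GB VRAM" means your card needs at least 3 GB in practice, because the desktop and the browser also take up some VRAM.

- Adding the various "optimization" flags trades some performance for lower VRAM use.

- Naifu model naming, note 1: animefull-final-pruned = full-latest = the full NAI model (includes NSFW)

- Naifu model naming, note 2: animesfw-latest = the NAI baseline model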
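The headroom rule from the first note can be turned into a quick lookup. This sketch copies the "from N GB" figures out of the table above and assumes about 1 GB of desktop overhead, which is the difference implied by the article's "2 GB means a 3 GB card" example; the names are illustrative only.

```python
# Starting VRAM (GB) per model, copied from the table above.
MODEL_VRAM_GB = {
    "stable-diffusion (4GB emaonly)": 2,
    "stable-diffusion (7GB full ema)": 4,
    "waifu (Float 16 EMA)": 2,
    "waifu (Float 32 EMA)": 2,
    "waifu (Float 32 Full)": 4,
    "waifu (Float 32 Full + Optimizer)": 8,
    "Naifu (4GB pruned)": 8,
    "Naifu (7GB latest)": 8,
}

# Assumption: desktop and browser take roughly 1 GB, per the 2 GB -> 3 GB example.
DESKTOP_OVERHEAD_GB = 1


def models_that_fit(card_vram_gb, overhead_gb=DESKTOP_OVERHEAD_GB):
    """List the models whose starting VRAM fits after subtracting overhead."""
    budget = card_vram_gb - overhead_gb
    return [name for name, need in MODEL_VRAM_GB.items() if need <= budget]
```

The result is only a first estimate at 512×512 with default settings; larger images or training need more.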

Downloading stable-diffusion

There are three ways to download it

- Official site: https://huggingface.co/CompVis/stable-diffusion-v-1-4-original

- File storage: https://drive.yerf.org/wl/?id=EBfTrmcCCUAGaQBXVIj5lJmEhjoP1tgl

- Magnet link: magnet:?xt=urn:btih:3a4a612d75ed088ea542acac52f9f45987488d1c&dn=sd-v1-4.ckpt&tr=udp%3a%2f%2ftracker.openbittorrent.com%3a6969%2fannounce&tr=udp%3a%2f%2ftracker.opentrackr.org%3a1337

Put the extracted .ckpt file in \stable-diffusion-webui\models\Stable-diffusion\

The file name can be any English name you like, for example stable-diffusion.ckpt

Downloading waifu-diffusion

- Official site: https://huggingface.co/hakurei/waifu-diffusion-v1-3/tree/main

- Downloading wd-v1-3-float16.ckpt is enough.

- Put the .ckpt file in \stable-diffusion-webui\models\Stable-diffusion\

The file name can be any English name you like, for example waifu-diffusion-16.ckpt

下载 NovelAILeaks

-

Naifu Leaks 4chan:https://pub-2fdef7a2969f43289c42ac5ae3412fd4.r2.dev/naifu.tar

-

Naifu Leaks animefull-latest:https://pub-2fdef7a2969f43289c42ac5ae3412fd4.r2.dev/animefull-latest.tar

-

找到

naifu\models\animefull-final-pruned\model.ckpt放在\stable-diffusion-webui\models\Stable-diffusion\下文件名可以是任何你喜欢的英文名,比如Naifu-Leaks- 4chan.ckpt -

找到

naifu\models\animefull-final-pruned\config.yaml放在\stable-diffusion-webui\models\Stable-diffusion\下文件名改成上边和你上边的文件同名,比如Naifu-Leaks- 4chan.yaml -

找到

naifu\modules\,把里面所有的.pt文件复制到\stable-diffusion-webui\models\hypernetworks\文件夹下,没有这个文件夹就自己新建一个。

Downloading other models

To keep this article short, other common image models are covered in a separate article:

"AI art: overview of common stable diffusion webui models"

运行

- 双击运行

\stable-diffusion-webui\webui-user.bat -

耐心等待,脚本会自己检查依赖,会下载大约几个G的东西,解压安装到文件夹内(视网速不同,可能需要20分钟~2小时不等)无论看起来是不是半天没变化,感觉像卡住了,或者你发现电脑也没下载东西,窗口也没变化。千万不要关闭这个黑乎乎的CMD窗口,只要窗口最下方没显示类似“按任意键关闭窗口”的话,那脚本就是依然在正常运行的。

绝大部分情况下,这个阶段出现报错都是因为你的git与python访问国际互联网时遇到障碍所导致的,比如类似Couldn’t checkout commit,Recv failure: Connection was reset,Proxy URL had no scheme, should start with http:// or https://的提示。

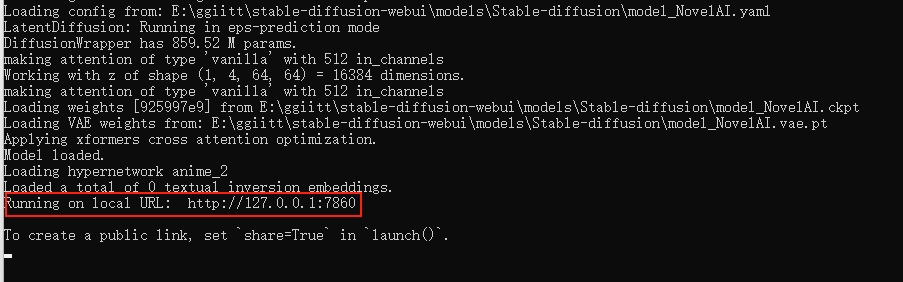

- 当你看到下图这行字的时候,就是安装成功了

-

复制到浏览器访问即可(默认是 http://127.0.0.1:7860 )(注意不要关闭这个窗口,关闭就退出了)
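The network errors mentioned above are commonly worked around by pointing git and pip at a local proxy through environment variables. The sketch below assumes the proxy address 127.0.0.1:6808 used earlier in this article and relies on the standard `HTTP_PROXY`/`HTTPS_PROXY` variables, which both git (via libcurl) and pip honor; the helper name `proxy_env` is made up. Note the explicit `http://` scheme, whose absence is what the "Proxy URL had no scheme" message complains about.

```python
import os


def proxy_env(host="127.0.0.1", port=6808, base_env=None):
    """Return a copy of the environment with proxy variables set.

    The default host and port are the article's example values.
    """
    env = dict(os.environ if base_env is None else base_env)
    url = f"http://{host}:{port}"  # the scheme is required
    names = ["HTTP_PROXY", "HTTPS_PROXY"]
    if os.name != "nt":
        # Variable names are case-sensitive outside Windows; set both spellings.
        names += ["http_proxy", "https_proxy"]
    for name in names:
        env[name] = url
    return env
```

A child process then inherits the proxy when started with, for example, `subprocess.run(["git", "clone", url], env=proxy_env())`. Setting the same two variables in the Command Prompt before launching webui-user.bat has the same effect.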

生成第一张AI作图

这里只是简单说一下,稍微详细一点的教程请看另一篇文章:《AI绘画指南 (SD webui)如何设置与使用》

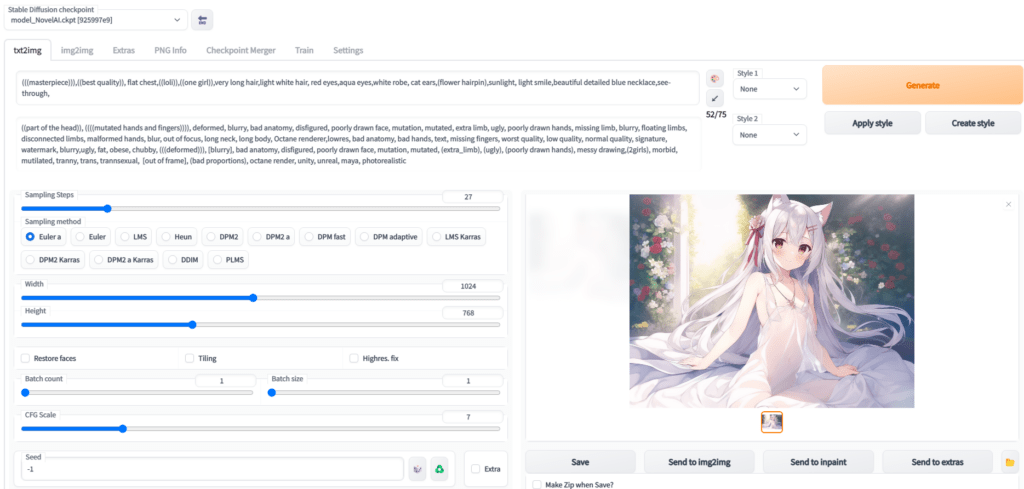

- Prompt 里填写想要的特征点

(((masterpiece))),((best quality)), flat chest,((loli)),((one girl)),very long light white hair, beautiful detailed red eyes,aqua eyes,white robe, cat ears,(flower hairpin),sunlight, light smile,blue necklace,see-through,

以上大概意思就是

杰作,最佳品质,贫乳,萝莉,1个女孩,很长的头发,淡白色头发,红色眼睛,浅绿色眼睛,白色长裙,猫耳,发夹,阳光下,淡淡的微笑,蓝色项链,透明

- Negative prompt: enter the features you do not want

((part of the head)), ((((mutated hands and fingers)))), deformed, blurry, bad anatomy, disfigured, poorly drawn face, mutation, mutated, extra limb, ugly, poorly drawn hands, missing limb, blurry, floating limbs, disconnected limbs, malformed hands, blur, out of focus, long neck, long body, Octane renderer,lowres, bad anatomy, bad hands, text, missing fingers, worst quality, low quality, normal quality, signature, watermark, blurry,ugly, fat, obese, chubby, (((deformed))), [blurry], bad anatomy, disfigured, poorly drawn face, mutation, mutated, (extra_limb), (ugly), (poorly drawn hands), messy drawing,(2girls), morbid, mutilated, tranny, trans, trannsexual, [out of frame], (bad proportions), octane render, unity, unreal, maya, photorealistic

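Roughly speaking, this excludes undesirable tags such as malformed hands or limbs, which lowers the odds of getting a strange image.

- Sampling Steps: think of this as how many steps the AI iterates. Results are usually presentable above about 17 steps. More steps add detail but take longer; 20 to 30 is a safe setting. In general this number does not change what is in the picture, it only refines it: at 20 steps the necklace is a heart-shaped diamond, and at 50 steps it is still the same heart-shaped diamond, only with more intricate lines on it.

- Sampling method: think of this as the algorithm the AI iterates with. Euler a, Euler, and DDIM are all good; pick any one.

- Image resolution: this comes down to your GPU's VRAM, so tune it yourself. Below 512×512 the picture may lack detail; the higher the resolution, the more room the AI has to work with.

- Below these are three extra options, which normally stay unchecked.

Restore faces: produces more realistic faces. The first time you enable it, it has to download several GB of supporting files.

Tiling: makes the image tileable (like a ceramic tile, the pattern joins seamlessly with itself on all four sides).

Highres. fix: super-resolution. The AI fills in content at a higher resolution, but the final size is still the one you set above.

- How many runs, and how many images per run

Batch count: how many times generation runs in sequence.

Batch size: how many images are generated at the same time.

For example: with Batch count set to 4, it takes N minutes × 4 and produces 4 images. With Batch size set to 4, it takes N minutes and produces 4 images, but needs 4 times the VRAM. 512×512 needs about 3.75 GB of VRAM, so 4 times that is 15 GB.

- CFG Scale: how closely the AI follows your Prompt and Negative prompt.

The higher it is, the more strictly the AI sticks to your settings, at the cost of creativity.

The lower it is, the more freely the AI draws whatever it likes.

Around 7 is usually fine.

- Seed: the random seed. In principle, AI image generation starts from a random noise image and works backward to a picture. Since a computer has no true randomness, the starting noise can be pinned down by a seed number.

- Generate: the start button. Click it and the AI gets to work.

- Stable Diffusion checkpoint: at the top left, this selects the model. You downloaded three earlier; choose according to your needs and experience.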
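The Batch count and Batch size arithmetic described above can be written out as a tiny calculator. This follows the article's simplified model only (time scales with Batch count, VRAM scales with Batch size, about 3.75 GB per 512×512 image); real numbers depend on the GPU, sampler, and flags. The function name is hypothetical.

```python
def batch_estimate(batch_count, batch_size, minutes_per_batch,
                   vram_per_image_gb=3.75):
    """Estimate image count, time, and VRAM under the article's simple model."""
    return {
        "images": batch_count * batch_size,
        "minutes": batch_count * minutes_per_batch,  # batches run one after another
        "vram_gb": batch_size * vram_per_image_gb,   # images in a batch share the GPU
    }


if __name__ == "__main__":
    print(batch_estimate(batch_count=4, batch_size=1, minutes_per_batch=2))
    print(batch_estimate(batch_count=1, batch_size=4, minutes_per_batch=2))
```

Both calls produce 4 images; the first takes four times as long, the second needs four times the VRAM.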

Afterword

- The NovelAILeaks model needs one extra setting. Open the [Settings] tab, scroll to the bottom of the page, find the option labeled "setting CLIP stop at last layers" (Clip skip), and set it to 2.

-

AI作图不是释放魔法,不是魔咒越长施法前摇越长的魔咒威力就越大。请简洁、准确、详细的描述你需要的Prompt即可。像我上边的要求就是,1个萝莉,穿白色连衣裙,瞳孔红色,长发,白色,带发卡,猫耳,微笑,阳光下。半透明材质衣服,已经WebUI是有75个词限制的。

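- If you want to generate larger images but your GPU is short on VRAM

Open \stable-diffusion-webui\webui-user.bat in a text editor.

After COMMANDLINE_ARGS= add --medvram

If that is not enough, change it to --medvram --xformers

If that is not enough, change it to --medvram --opt-split-attention --xformers

If that is not enough, change it to --lowvram

If that is not enough, change it to --lowvram --xformers

If that is not enough, change it to --lowvram --opt-split-attention

Note that this trades generation time for image size; the most extreme settings can make generation take several times longer than before.

To give a sense of the most extreme settings: with the default configuration a 512×512 image takes under 10 seconds but needs 4 GB of VRAM. With the most extreme configuration it needs only 0.5 to 0.7 GB (depending on the Sampling method), but the time rises to 3 minutes.

- On 16XX-series cards, open \stable-diffusion-webui\webui-user.bat in a text editor.

After COMMANDLINE_ARGS= add --precision full --no-half

It should look like the listing below. Otherwise generated images come out as black or green blocks, which is a bug on 16XX-series cards.

@echo off

set PYTHON=

set GIT=

set VENV_DIR=

set COMMANDLINE_ARGS=--precision full --no-half

call webui.bat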

- The tags used for the sample image are listed below. In theory, identical parameters reproduce the same picture (apart from minor details).

Stable Diffusion checkpoint:NovelAILeaks 4chan[925997e9]

prompt:

(((masterpiece))),((best quality)), flat chest,((loli)),((one girl)),very long hair,light white hair, red eyes,aqua eyes,white robe, cat ears,(flower hairpin),sunlight, light smile,beautiful detailed blue necklace,see-through,

Negative prompt: ((part of the head)), ((((mutated hands and fingers)))), deformed, blurry, bad anatomy, disfigured, poorly drawn face, mutation, mutated, extra limb, ugly, poorly drawn hands, missing limb, blurry, floating limbs, disconnected limbs, malformed hands, blur, out of focus, long neck, long body, Octane renderer,lowres, bad anatomy, bad hands, text, missing fingers, worst quality, low quality, normal quality, signature, watermark, blurry,ugly, fat, obese, chubby, (((deformed))), [blurry], bad anatomy, disfigured, poorly drawn face, mutation, mutated, (extra_limb), (ugly), (poorly drawn hands), messy drawing,(2girls), morbid, mutilated, tranny, trans, trannsexual, [out of frame], (bad proportions), octane render, unity, unreal, maya, photorealistic

Steps: 27, Sampler: Euler a, CFG scale: 7, Seed: 2413789891, Size: 1024x768, Model hash: 925997e9, Clip skip: 2

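- If you want to understand how AI image generation works, here is a good article: "Learning AI art, starting from Diffusion and CLIP"

- If your own hardware is not powerful enough and you want to rent a GPU machine, see the "Common cloud GPUs" section of this article.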
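The COMMANDLINE_ARGS edits described in the afterword (the escalating memory flags and the 16XX workaround) can be summarized in a small helper that builds the line for webui-user.bat. This is only a sketch: the flag strings are the ones listed in this article, while the "level" numbering and the function name are invented here for illustration.

```python
# Memory-saving flag sets in the order the article suggests trying them.
VRAM_FALLBACKS = [
    "--medvram",
    "--medvram --xformers",
    "--medvram --opt-split-attention --xformers",
    "--lowvram",
    "--lowvram --xformers",
    "--lowvram --opt-split-attention",
]

# Workaround the article gives for black or green output on 16XX cards.
GTX_16XX_FLAGS = "--precision full --no-half"


def commandline_args_line(level=0, gtx_16xx=False):
    """Build the `set COMMANDLINE_ARGS=` line; level 0 adds no memory flags."""
    if not 0 <= level <= len(VRAM_FALLBACKS):
        raise ValueError(f"level must be between 0 and {len(VRAM_FALLBACKS)}")
    parts = []
    if level > 0:
        parts.append(VRAM_FALLBACKS[level - 1])
    if gtx_16xx:
        parts.append(GTX_16XX_FLAGS)
    return "set COMMANDLINE_ARGS=" + " ".join(parts)
```

Start at the lowest level that works, since each step down the list costs more generation time.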

smok

2022-12-16 23:23

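Could you please take a look? I have tried everyone's methods and they all end with "press any key to exit". I am really about to give up.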

stderr: ERROR: Exception:

Traceback (most recent call last):

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\urllib3\response.py”, line 435, in _error_catcher

yield

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\urllib3\response.py”, line 516, in read

data = self._fp.read(amt) if not fp_closed else b””

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\cachecontrol\filewrapper.py”, line 90, in read

data = self.__fp.read(amt)

File “C:\Users\SMOK\AppData\Local\Programs\Python\Python310\lib\http\client.py”, line 465, in read

s = self.fp.read(amt)

File “C:\Users\SMOK\AppData\Local\Programs\Python\Python310\lib\socket.py”, line 705, in readinto

return self._sock.recv_into(b)

File “C:\Users\SMOK\AppData\Local\Programs\Python\Python310\lib\ssl.py”, line 1274, in recv_into

return self.read(nbytes, buffer)

File “C:\Users\SMOK\AppData\Local\Programs\Python\Python310\lib\ssl.py”, line 1130, in read

return self._sslobj.read(len, buffer)

TimeoutError: The read operation timed out

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\cli\base_command.py”, line 167, in exc_logging_wrapper

status = run_func(*args)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\cli\req_command.py”, line 247, in wrapper

return func(self, options, args)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\commands\install.py”, line 369, in run

requirement_set = resolver.resolve(

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\resolver.py”, line 92, in resolve

result = self._result = resolver.resolve(

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\resolvelib\resolvers.py”, line 481, in resolve

state = resolution.resolve(requirements, max_rounds=max_rounds)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\resolvelib\resolvers.py”, line 348, in resolve

self._add_to_criteria(self.state.criteria, r, parent=None)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\resolvelib\resolvers.py”, line 172, in _add_to_criteria

if not criterion.candidates:

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\resolvelib\structs.py”, line 151, in bool

return bool(self._sequence)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\found_candidates.py”, line 155, in bool

return any(self)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\found_candidates.py”, line 143, in

return (c for c in iterator if id(c) not in self._incompatible_ids)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\found_candidates.py”, line 47, in _iter_built

candidate = func()

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\factory.py”, line 206, in _make_candidate_from_link

self._link_candidate_cache[link] = LinkCandidate(

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\candidates.py”, line 297, in init

super().init(

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\candidates.py”, line 162, in init

self.dist = self._prepare()

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\candidates.py”, line 231, in _prepare

dist = self._prepare_distribution()

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\resolution\resolvelib\candidates.py”, line 308, in _prepare_distribution

return preparer.prepare_linked_requirement(self._ireq, parallel_builds=True)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\operations\prepare.py”, line 438, in prepare_linked_requirement

return self._prepare_linked_requirement(req, parallel_builds)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\operations\prepare.py”, line 483, in _prepare_linked_requirement

local_file = unpack_url(

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\operations\prepare.py”, line 165, in unpack_url

file = get_http_url(

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\operations\prepare.py”, line 106, in get_http_url

from_path, content_type = download(link, temp_dir.path)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\network\download.py”, line 147, in call

for chunk in chunks:

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\cli\progress_bars.py”, line 53, in _rich_progress_bar

for chunk in iterable:

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_internal\network\utils.py”, line 63, in response_chunks

for chunk in response.raw.stream(

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\urllib3\response.py”, line 573, in stream

data = self.read(amt=amt, decode_content=decode_content)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\urllib3\response.py”, line 509, in read

with self._error_catcher():

File “C:\Users\SMOK\AppData\Local\Programs\Python\Python310\lib\contextlib.py”, line 153, in exit

self.gen.throw(typ, value, traceback)

File “D:\stable-diffusion-webui\venv\lib\site-packages\pip_vendor\urllib3\response.py”, line 440, in _error_catcher

raise ReadTimeoutError(self._pool, None, “Read timed out.”)

pip._vendor.urllib3.exceptions.ReadTimeoutError: HTTPSConnectionPool(host=’download.pytorch.org’, port=443): Read timed out.

[notice] A new release of pip available: 22.2.1 -> 22.3.1

[notice] To update, run: D:\stable-diffusion-webui\venv\Scripts\python.exe -m pip install –upgrade pip

请按任意键继续. . .

yige

2022-12-16 11:26

OSError: Can’t load tokenizer for ‘openai/clip-vit-large-patch14’. If you were trying to load it from ‘https://huggingface.co/models’, make sure you don’t have a local directory with the same name. Otherwise, make sure ‘openai/clip-vit-large-patch14’ is the correct path to a directory containing all relevant files for a CLIPTokenizer tokenizer.

去年夏天

2022-12-16 11:34

Put simply, it cannot download the resource from that URL. Check your network; you may need to set a proxy for git and python.

Ralts

2022-12-14 15:35

venv “D:\AI-draw\stable-diffusion-webui\venv\Scripts\Python.exe”

Python 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]

Commit hash: 685f9631b56ff8bd43bce24ff5ce0f9a0e9af490

Installing clip

Traceback (most recent call last):

File “D:\AI-draw\stable-diffusion-webui\launch.py”, line 294, in

prepare_environment()

File “D:\AI-draw\stable-diffusion-webui\launch.py”, line 215, in prepare_environment

run_pip(f”install {clip_package}”, “clip”)

File “D:\AI-draw\stable-diffusion-webui\launch.py”, line 78, in run_pip

return run(f'”{python}” -m pip {args} –prefer-binary{index_url_line}’, desc=f”Installing {desc}”, errdesc=f”Couldn’t install {desc}”)

File “D:\AI-draw\stable-diffusion-webui\launch.py”, line 49, in run

raise RuntimeError(message)

RuntimeError: Couldn’t install clip.

Command: “D:\AI-draw\stable-diffusion-webui\venv\Scripts\python.exe” -m pip install git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1 –prefer-binary

Error code: 1

stdout: Looking in indexes: https://pypi.tuna.tsinghua.edu.cn/simple, , …

Collecting git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1

Cloning https://github.com/openai/CLIP.git (to revision d50d76daa670286dd6cacf3bcd80b5e4823fc8e1) to c:\users\lenovo\appdata\local\temp\pip-req-build-hhn14o

stderr: WARNING: The index url “” seems invalid, please provide a scheme.

WARNING: The index url “…” seems invalid, please provide a scheme.

Running command git clone –filter=blob:none –quiet https://github.com/openai/CLIP.git ‘C:\Users\lenovo\AppData\Local\Temp\pip-req-build-hhn14o‘

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21109 ms: Couldn’t connect to server

error: subprocess-exited-with-error

git clone –filter=blob:none –quiet https://github.com/openai/CLIP.git ‘C:\Users\lenovo\AppData\Local\Temp\pip-req-build-hhn14o‘ did not run successfully.

exit code: 128

See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

error: subprocess-exited-with-error

git clone –filter=blob:none –quiet https://github.com/openai/CLIP.git ‘C:\Users\lenovo\AppData\Local\Temp\pip-req-build-hhn14o‘ did not run successfully.

exit code: 128

See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

请按任意键继续. . .

去年夏天

2022-12-14 15:39

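Network problem; route git and python through a proxy.

1. Turn on TUN mode in your proxy tool.

2. Set the proxy manually in git and python.

3. Use software such as Proxifier to add a proxy for git and python.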

sky

2022-12-14 15:33

venv “E:\AI\stable-diffusion-webui\venv\Scripts\Python.exe”

Python 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]

Commit hash: 685f9631b56ff8bd43bce24ff5ce0f9a0e9af490

Installing gfpgan

Traceback (most recent call last):

File “E:\AI\stable-diffusion-webui\launch.py”, line 294, in

prepare_environment()

File “E:\AI\stable-diffusion-webui\launch.py”, line 212, in prepare_environment

run_pip(f”install {gfpgan_package}”, “gfpgan”)

File “E:\AI\stable-diffusion-webui\launch.py”, line 78, in run_pip

return run(f'”{python}” -m pip {args} –prefer-binary{index_url_line}’, desc=f”Installing {desc}”, errdesc=f”Couldn’t install {desc}”)

File “E:\AI\stable-diffusion-webui\launch.py”, line 49, in run

raise RuntimeError(message)

RuntimeError: Couldn’t install gfpgan.

Command: “E:\AI\stable-diffusion-webui\venv\Scripts\python.exe” -m pip install git+https://github.com/TencentARC/GFPGAN.git@8d2447a2d918f8eba5a4a01463fd48e45126a379 –prefer-binary

Error code: 1

stdout: Collecting git+https://github.com/TencentARC/GFPGAN.git@8d2447a2d918f8eba5a4a01463fd48e45126a379

Cloning https://github.com/TencentARC/GFPGAN.git (to revision 8d2447a2d918f8eba5a4a01463fd48e45126a379) to c:\users\83805\appdata\local\temp\pip-req-build-kveh1dad

stderr: Running command git clone –filter=blob:none –quiet https://github.com/TencentARC/GFPGAN.git ‘C:\Users\83805\AppData\Local\Temp\pip-req-build-kveh1dad’

fatal: unable to access ‘https://github.com/TencentARC/GFPGAN.git/’: OpenSSL SSL_read: Connection was reset, errno 10054

fatal: could not fetch fa702eeacff13fe8475b0e102a8b8c37602f3963 from promisor remote

warning: Clone succeeded, but checkout failed.

You can inspect what was checked out with ‘git status’

and retry with ‘git restore –source=HEAD :/’

error: subprocess-exited-with-error

git clone –filter=blob:none –quiet https://github.com/TencentARC/GFPGAN.git ‘C:\Users\83805\AppData\Local\Temp\pip-req-build-kveh1dad’ did not run successfully.

exit code: 128

See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

error: subprocess-exited-with-error

git clone –filter=blob:none –quiet https://github.com/TencentARC/GFPGAN.git ‘C:\Users\83805\AppData\Local\Temp\pip-req-build-kveh1dad’ did not run successfully.

exit code: 128

See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

[notice] A new release of pip available: 22.2.1 -> 22.3.1

[notice] To update, run: E:\AI\stable-diffusion-webui\venv\Scripts\python.exe -m pip install –upgrade pip

What is going on here?

去年夏天

2022-12-14 15:41

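Network problem; route git and python through a proxy.

1. Turn on TUN mode in your proxy tool.

2. Set the proxy manually in git and python.

3. Use software such as Proxifier to add a proxy for git and python.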

Blancho

2022-12-09 10:46

In the NovelAILeaks download step, I could not seem to find the /naifu/modules/ directory?

去年夏天

2022-12-09 14:54

Look for a similar directory; upstream may have changed the files.

Blancho

2022-12-10 15:28

I could not find "Stop At last layers of CLIP model" in Settings. I redeployed with 3.10.6 and it is still missing.

去年夏天

2022-12-11 10:25

It is now labeled "setting CLIP stop at last layers" there.

HHB

2022-12-03 04:57

Traceback (most recent call last):

File “G:\AI\stable-diffusion-webui\launch.py”, line 293, in

prepare_enviroment()

File “G:\AI\stable-diffusion-webui\launch.py”, line 214, in prepare_enviroment

run_pip(f”install {clip_package}”, “clip”)

File “G:\AI\stable-diffusion-webui\launch.py”, line 78, in run_pip

return run(f'”{python}” -m pip {args} –prefer-binary{index_url_line}’, desc=f”Installing {desc}”, errdesc=f”Couldn’t install {desc}”)

File “G:\AI\stable-diffusion-webui\launch.py”, line 49, in run

raise RuntimeError(message)

RuntimeError: Couldn’t install clip.

Command: “G:\AI\stable-diffusion-webui\HHB\Scripts\python3.exe” -m pip install git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1 –prefer-binary

Error code: 1

stdout: Looking in indexes: https://pypi.org/simple/

Collecting git+https://github.com/openai/CLIP.git@d50d76daa670286dd6cacf3bcd80b5e4823fc8e1

Cloning https://github.com/openai/CLIP.git (to revision d50d76daa670286dd6cacf3bcd80b5e4823fc8e1) to c:\users\administrator\appdata\local\temp\pip-req-build-sb2rkfwf

stderr: Running command git clone –filter=blob:none –quiet https://github.com/openai/CLIP.git ‘C:\Users\Administrator\AppData\Local\Temp\pip-req-build-sb2rkfwf’

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: OpenSSL SSL_read: Connection was reset, errno 10054

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21081 ms: Timed out

error: unable to read sha1 file of .github/workflows/test.yml (570a54198bb0d012a585c3999d5281c07cd339e9)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21068 ms: Timed out

error: unable to read sha1 file of CLIP.png (a1b5ec9171fd7a51e36e845a02304eb837142ba1)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21045 ms: Timed out

error: unable to read sha1 file of MANIFEST.in (effd8d995ff1842a48c69d2a0f7c8dce4423d7a2)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21099 ms: Timed out

error: unable to read sha1 file of README.md (abc8a347e095c11c31b6a42a117f21630fa52bda)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21091 ms: Timed out

error: unable to read sha1 file of clip/clip.py (257511e1d40c120e0d64a0f1562d44b2b8a40a17)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21061 ms: Timed out

error: unable to read sha1 file of clip/model.py (232b7792eb97440642547bd462cf128df9243933)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21074 ms: Timed out

error: unable to read sha1 file of clip/simple_tokenizer.py (0a66286b7d5019c6e221932a813768038f839c91)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21074 ms: Timed out

error: unable to read sha1 file of data/yfcc100m.md (06083ef9a613b5d360e87c3f395c2a16c6e9208e)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21048 ms: Timed out

error: unable to read sha1 file of notebooks/Interacting_with_CLIP.ipynb (1043f2389d3aa8dde666036ce7221bea2cce6754)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21070 ms: Timed out

error: unable to read sha1 file of notebooks/Prompt_Engineering_for_ImageNet.ipynb (dba7fb654f4bed7adff1da1e7d4297670ea51469)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21090 ms: Timed out

error: unable to read sha1 file of requirements.txt (6b98c33f3a0e09ddf982606430472de3061c6e9f)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: OpenSSL SSL_read: Connection was reset, errno 10054

error: unable to read sha1 file of setup.py (c9ea7d0d2f3d2fcf66d6f6e2aa0eb1a97a524bb6)

fatal: unable to access ‘https://github.com/openai/CLIP.git/’: Failed to connect to github.com port 443 after 21066 ms: Timed out

error: unable to read sha1 file of tests/test_consistency.py (f2c6fd4fe9074143803e0eb6c99fa02a47632094)

fatal: unable to checkout working tree

warning: Clone succeeded, but checkout failed.

You can inspect what was checked out with ‘git status’

and retry with ‘git restore –source=HEAD :/’

error: subprocess-exited-with-error

git clone –filter=blob:none –quiet https://github.com/openai/CLIP.git ‘C:\Users\Administrator\AppData\Local\Temp\pip-req-build-sb2rkfwf’ did not run successfully.

exit code: 128

See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

error: subprocess-exited-with-error

git clone –filter=blob:none –quiet https://github.com/openai/CLIP.git ‘C:\Users\Administrator\AppData\Local\Temp\pip-req-build-sb2rkfwf’ did not run successfully.

exit code: 128

See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

What is causing this? Is it the network?

去年夏天

2022-12-03 17:42

Those are all timeouts, so it is most likely a network problem.

ovo

2022-12-01 14:54

venv “C:\AI\AI\sd web ui\stable-diffusion-webui\venv\Scripts\Python.exe”

Python 3.10.8 (tags/v3.10.8:aaaf517, Oct 11 2022, 16:50:30) [MSC v.1933 64 bit (AMD64)]

Commit hash: 4b3c5bc24bffdf429c463a465763b3077fe55eb8

Installing torch and torchvision

Traceback (most recent call last):

File “C:\AI\AI\sd web ui\stable-diffusion-webui\launch.py”, line 293, in

prepare_enviroment()

File “C:\AI\AI\sd web ui\stable-diffusion-webui\launch.py”, line 205, in prepare_enviroment

run(f'”{python}” -m {torch_command}’, “Installing torch and torchvision”, “Couldn’t install torch”)

File “C:\AI\AI\sd web ui\stable-diffusion-webui\launch.py”, line 49, in run

raise RuntimeError(message)

RuntimeError: Couldn’t install torch.

Command: “C:\AI\AI\sd web ui\stable-diffusion-webui\venv\Scripts\python.exe” -m pip install torch1.12.1+cu113 torchvision0.13.1+cu113 –extra-index-url https://download.pytorch.org/whl/cu113

Error code: 1

stdout:

stderr: C:\AI\AI\sd web ui\stable-diffusion-webui\venv\Scripts\python.exe: No module named pip

尼米兹1234

2022-11-21 21:09

Error verifying pickled file from C:\Users\z234c/.cache\huggingface\transformers\c506559a5367a918bab46c39c79af91ab88846b49c8abd9d09e699ae067505c6.6365d436cc844f2f2b4885629b559d8ff0938ac484c01a6796538b2665de96c7:

Traceback (most recent call last):

File “C:\Users\z234c\stable-diffusion-webui\modules\safe.py”, line 83, in check_pt

with zipfile.ZipFile(filename) as z:

File “C:\Users\z234c\AppData\Local\Programs\Python\Python310\lib\zipfile.py”, line 1267, in init

self._RealGetContents()

File “C:\Users\z234c\AppData\Local\Programs\Python\Python310\lib\zipfile.py”, line 1334, in _RealGetContents

raise BadZipFile(“File is not a zip file”)

zipfile.BadZipFile: File is not a zip file

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File “C:\Users\z234c\stable-diffusion-webui\modules\safe.py”, line 131, in load_with_extra

check_pt(filename, extra_handler)

File “C:\Users\z234c\stable-diffusion-webui\modules\safe.py”, line 98, in check_pt

unpickler.load()

_pickle.UnpicklingError: persistent IDs in protocol 0 must be ASCII strings

—–> !!!! The file is most likely corrupted !!!! <—–

You can skip this check with –disable-safe-unpickle commandline argument, but that is not going to help you.

Traceback (most recent call last):

File “C:\Users\z234c\stable-diffusion-webui\launch.py”, line 251, in

start()

File “C:\Users\z234c\stable-diffusion-webui\launch.py”, line 246, in start

webui.webui()

File “C:\Users\z234c\stable-diffusion-webui\webui.py”, line 150, in webui

initialize()

File “C:\Users\z234c\stable-diffusion-webui\webui.py”, line 85, in initialize

modules.sd_models.load_model()

File “C:\Users\z234c\stable-diffusion-webui\modules\sd_models.py”, line 247, in load_model

sd_model = instantiate_from_config(sd_config.model)

File “C:\Users\z234c\stable-diffusion-webui\repositories\stable-diffusion\ldm\util.py”, line 85, in instantiate_from_config

return get_obj_from_str(config[“target”])(config.get(“params”, dict()))

File “C:\Users\z234c\stable-diffusion-webui\repositories\stable-diffusion\ldm\models\diffusion\ddpm.py”, line 461, in __init__

self.instantiate_cond_stage(cond_stage_config)

File “C:\Users\z234c\stable-diffusion-webui\repositories\stable-diffusion\ldm\models\diffusion\ddpm.py”, line 519, in instantiate_cond_stage

model = instantiate_from_config(config)

File “C:\Users\z234c\stable-diffusion-webui\repositories\stable-diffusion\ldm\util.py”, line 85, in instantiate_from_config

return get_obj_from_str(config[“target”])(config.get(“params”, dict()))

File “C:\Users\z234c\stable-diffusion-webui\repositories\stable-diffusion\ldm\modules\encoders\modules.py”, line 142, in init

self.transformer = CLIPTextModel.from_pretrained(version)

File “C:\Users\z234c\stable-diffusion-webui\venv\lib\site-packages\transformers\modeling_utils.py”, line 2006, in from_pretrained

loaded_state_dict_keys = [k for k in state_dict.keys()]

AttributeError: ‘NoneType’ object has no attribute ‘keys’

What does this mean?

wyk

2022-11-20 20:59

venv “D:\navai\novelai-webui一键包\novelai-webui-aki 3\venv\Scripts\Python.exe”

Python 3.10.8 (tags/v3.10.8:aaaf517, Oct 11 2022, 16:50:30) [MSC v.1933 64 bit (AMD64)]

Commit hash: 905304243cf121bfa87aac44a1290607ea2aa50e

Installing gfpgan

Traceback (most recent call last):

File “D:\navai\novelai-webui一键包\novelai-webui-aki 3\launch.py”, line 255, in

prepare_enviroment()

File “D:\navai\novelai-webui一键包\novelai-webui-aki 3\launch.py”, line 179, in prepare_enviroment

run_pip(f”install {gfpgan_package}”, “gfpgan”)

File “D:\navai\novelai-webui一键包\novelai-webui-aki 3\launch.py”, line 63, in run_pip

return run(f'”{python}” -m pip {args} –prefer-binary{index_url_line}’, desc=f”Installing {desc}”, errdesc=f”Couldn’t install {desc}”)

File “D:\navai\novelai-webui一键包\novelai-webui-aki 3\launch.py”, line 34, in run

raise RuntimeError(message)

RuntimeError: Couldn’t install gfpgan.

Command: “D:\navai\novelai-webui一键包\novelai-webui-aki 3\venv\Scripts\python.exe” -m pip install git+https://github.com/TencentARC/GFPGAN.git@8d2447a2d918f8eba5a4a01463fd48e45126a379 –prefer-binary

Error code: 1

stdout: Collecting git+https://github.com/TencentARC/GFPGAN.git@8d2447a2d918f8eba5a4a01463fd48e45126a379

Cloning https://github.com/TencentARC/GFPGAN.git (to revision 8d2447a2d918f8eba5a4a01463fd48e45126a379) to c:\users\0000\appdata\local\temp\pip-req-build-y526xf4j

stderr: Running command git clone –filter=blob:none –quiet https://github.com/TencentARC/GFPGAN.git ‘C:\Users\0000\AppData\Local\Temp\pip-req-build-y526xf4j’

Running command git rev-parse -q –verify ‘sha^8d2447a2d918f8eba5a4a01463fd48e45126a379’

Running command git fetch -q https://github.com/TencentARC/GFPGAN.git 8d2447a2d918f8eba5a4a01463fd48e45126a379

fatal: unable to access ‘https://github.com/TencentARC/GFPGAN.git/’: Failed to connect to github.com port 443 after 21065 ms: Timed out

error: subprocess-exited-with-error

Can anyone tell me what is going on here? Thanks in advance.

去年夏天

2022-11-21 10:23

Judging from the message, the download simply timed out. Try routing git through a proxy?
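
One way to try the proxy suggestion is to start the installer with proxy environment variables set, since git (through libcurl) and pip both read them. The sketch below assumes the local proxy address 127.0.0.1:6808 that the article uses as its example. `proxy_env` is a hypothetical helper name, and the launch command in the final comment is only illustrative.

```python
import os


def proxy_env(host="127.0.0.1", port=6808, base=None):
    """Return a copy of the environment with HTTP(S) proxy variables set.

    host and port are assumptions: replace them with your own proxy address.
    """
    source = os.environ if base is None else base
    wanted = {"http_proxy", "https_proxy"}
    # Drop existing proxy settings in any letter case so no stale value survives.
    env = {k: v for k, v in source.items() if k.lower() not in wanted}
    url = f"http://{host}:{port}"
    env["http_proxy"] = url
    env["https_proxy"] = url
    return env


if __name__ == "__main__":
    env = proxy_env()
    print(env["https_proxy"])
    # Illustrative only: launch the web UI with the proxy applied.
    # import subprocess
    # subprocess.run(["cmd", "/c", "webui-user.bat"], env=env)
```

Passing the environment to a single child process keeps the proxy out of the global git configuration, so nothing has to be undone afterwards.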

Tiny

2022-11-13 16:15

Why does this happen?

venv “F:\AI-windows-WebUI\stable-diffusion-webui\venv\Scripts\Python.exe”

Python 3.10.8 (tags/v3.10.8:aaaf517, Oct 11 2022, 16:50:30) [MSC v.1933 64 bit (AMD64)]

Commit hash: 98947d173e3f1667eba29c904f681047dea9de90

Installing requirements for Web UI

Launching Web UI with arguments:

Loading config from: F:\AI-windows-WebUI\stable-diffusion-webui\models\Stable-diffusion\Naifu-Leaks.yaml

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

making attention of type ‘vanilla’ with 512 in_channels

Working with z of shape (1, 4, 64, 64) = 16384 dimensions.

making attention of type ‘vanilla’ with 512 in_channels

Traceback (most recent call last):

File “F:\AI-windows-WebUI\stable-diffusion-webui\launch.py”, line 256, in

start()

File “F:\AI-windows-WebUI\stable-diffusion-webui\launch.py”, line 251, in start

webui.webui()

File “F:\AI-windows-WebUI\stable-diffusion-webui\webui.py”, line 146, in webui

initialize()

File “F:\AI-windows-WebUI\stable-diffusion-webui\webui.py”, line 82, in initialize

modules.sd_models.load_model()

File “F:\AI-windows-WebUI\stable-diffusion-webui\modules\sd_models.py”, line 253, in load_model

sd_model = instantiate_from_config(sd_config.model)

File “F:\AI-windows-WebUI\stable-diffusion-webui\repositories\stable-diffusion\ldm\util.py”, line 85, in instantiate_from_config

return get_obj_from_str(config[“target”])(config.get(“params”, dict()))

File “F:\AI-windows-WebUI\stable-diffusion-webui\repositories\stable-diffusion\ldm\models\diffusion\ddpm.py”, line 461, in __init__

self.instantiate_cond_stage(cond_stage_config)

File “F:\AI-windows-WebUI\stable-diffusion-webui\repositories\stable-diffusion\ldm\models\diffusion\ddpm.py”, line 519, in instantiate_cond_stage

model = instantiate_from_config(config)

File “F:\AI-windows-WebUI\stable-diffusion-webui\repositories\stable-diffusion\ldm\util.py”, line 85, in instantiate_from_config

return get_obj_from_str(config[“target”])(config.get(“params”, dict()))

File “F:\AI-windows-WebUI\stable-diffusion-webui\repositories\stable-diffusion\ldm\modules\encoders\modules.py”, line 141, in init

self.tokenizer = CLIPTokenizer.from_pretrained(version)

File “F:\AI-windows-WebUI\stable-diffusion-webui\venv\lib\site-packages\transformers\tokenization_utils_base.py”, line 1768, in from_pretrained

raise EnvironmentError(

OSError: Can’t load tokenizer for ‘openai/clip-vit-large-patch14’. If you were trying to load it from ‘https://huggingface.co/models’, make sure you don’t have a local directory with the same name. Otherwise, make sure ‘openai/clip-vit-large-patch14’ is the correct path to a directory containing all relevant files for a CLIPTokenizer tokenizer.

Press any key to continue . . .

去年夏天

2022-11-21 10:22

Could the model .ckpt file be corrupted?
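
To test the corrupted-file theory, one option is to compare the checkpoint's SHA-256 digest with the value published on the download page, if the page lists one. This is a minimal sketch using only the standard library. `sha256_of_file` and `matches_published_hash` are hypothetical helper names.

```python
import hashlib


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a large file in chunks so it never has to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_hash(path, expected_hex):
    """True when the file's digest equals the published one (case-insensitive)."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

A mismatch points to an incomplete or damaged download. A match means the file is intact and the cause lies elsewhere.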

𝑨𝑵𝑳𝑰𝑵.STUDIO

2022-11-12 14:11

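Now every time I open webui-user.bat I get CUDA out of memory, saying 0 bytes of VRAM are free, and then it errors out. But Task Manager shows the GPU at 0%, and nvidia-smi reports 161MiB / 2048MiB in use. What is going on? Any help appreciated, thanks: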

Python 3.10.5 (tags/v3.10.5:f377153, Jun 6 2022, 16:14:13) [MSC v.1929 64 bit (AMD64)]

Commit hash: 7ba3923d5b494b7756d0b12f33acb3716d830b9a

Installing requirements for Web UI

Launching Web UI with arguments:

Loading config from: H:\stable-diffusion-webui\models\Stable-diffusion\Naifu-Leaks- 4chan.yaml

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

making attention of type ‘vanilla’ with 512 in_channels

Working with z of shape (1, 4, 64, 64) = 16384 dimensions.

making attention of type ‘vanilla’ with 512 in_channels

Loading weights [925997e9] from H:\stable-diffusion-webui\models\Stable-diffusion\Naifu-Leaks- 4chan.ckpt

Traceback (most recent call last):

File “H:\stable-diffusion-webui\launch.py”, line 252, in

start()

File “H:\stable-diffusion-webui\launch.py”, line 247, in start

webui.webui()

File “H:\stable-diffusion-webui\webui.py”, line 146, in webui

initialize()

File “H:\stable-diffusion-webui\webui.py”, line 82, in initialize

modules.sd_models.load_model()

File “H:\stable-diffusion-webui\modules\sd_models.py”, line 259, in load_model

sd_model.to(shared.device)

File “H:\stable-diffusion-webui\venv\lib\site-packages\pytorch_lightning\core\mixins\device_dtype_mixin.py”, line 113, in to

return super().to(*args, **kwargs)

File “H:\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py”, line 927, in to

return self._apply(convert)

File “H:\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py”, line 579, in _apply

module._apply(fn)

File “H:\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py”, line 579, in _apply

module._apply(fn)

File “H:\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py”, line 579, in _apply

module._apply(fn)

[Previous line repeated 2 more times]

File “H:\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py”, line 602, in _apply

param_applied = fn(param)

File “H:\stable-diffusion-webui\venv\lib\site-packages\torch\nn\modules\module.py”, line 925, in convert

return t.to(device, dtype if t.is_floating_point() or t.is_complex() else None, non_blocking)

RuntimeError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 2.00 GiB total capacity; 1.68 GiB already allocated; 0 bytes free; 1.72 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

去年夏天

2022-11-13 11:06

Hard to say. Try switching Python to 3.10.6; I see you are on 3.10.5.

xita

2022-11-06 14:28

RuntimeError: Sizes of tensors must match except in dimension 1. Expected size 136 but got size 135 for tensor number 1 in the list.

去年夏天

2022-11-07 22:05

Did you set an unusual aspect ratio? Try a common image ratio such as 1:1 or 4:3.
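
A mismatch of exactly one (136 versus 135) is what one would expect when the width or height is not a multiple of 64. The image is encoded at 1/8 resolution and the U-Net halves that three more times, so an odd intermediate size cannot be restored exactly. That diagnosis is an assumption, not something confirmed in this thread. Under that assumption, snapping the requested size to the nearest multiple of 64 avoids the error. `snap_to_multiple` and `snap_size` are hypothetical helper names.

```python
def snap_to_multiple(value, multiple=64):
    """Round a pixel dimension to the nearest multiple, never below one multiple."""
    snapped = (value + multiple // 2) // multiple * multiple
    return max(multiple, snapped)


def snap_size(width, height, multiple=64):
    """Snap both dimensions of an image size."""
    return snap_to_multiple(width, multiple), snap_to_multiple(height, multiple)


if __name__ == "__main__":
    # 1080 / 8 = 135, the odd size that appears in the error message.
    print(snap_size(1920, 1080))
```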

xita

2022-11-05 10:54

launch.py: error: unrecognized arguments: –鈥損recision full 鈥?no-half

xita

2022-11-05 11:56

launch.py: error: unrecognized arguments: -precision full -no-half

去年夏天

2022-11-05 12:05

--precision full --no-half: each flag needs two hyphens.
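
Garbled arguments like the ones above are typical of copying flags from a web page that has turned `--` into a typographic dash. This small sketch assumes that is the cause. It rewrites typographic dashes and single leading hyphens back to two ASCII hyphens before the string is pasted into `COMMANDLINE_ARGS`. `normalize_flags` is a hypothetical helper name.

```python
# En dash, em dash, horizontal bar, minus sign: characters that web pages
# commonly substitute for "--".
_FANCY_DASHES = "\u2013\u2014\u2015\u2212"


def normalize_flags(args):
    """Return args with every multi-letter option written with two ASCII hyphens."""
    fixed = []
    for token in args.split():
        # Strip any mix of ASCII hyphens and typographic dashes from the front.
        name = token.lstrip("-" + _FANCY_DASHES)
        # Values such as "full" have no leading dash; "-1" or "-h" stay untouched.
        is_option = name != token and len(name) > 1
        fixed.append("--" + name if is_option else token)
    return " ".join(fixed)


if __name__ == "__main__":
    print(normalize_flags("\u2013precision full \u2013no-half"))
```

The helper treats every multi-letter option as a long option, which fits the flags quoted in this thread but is not a general rule for command-line tools.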

-xita

2022-11-05 19:20

RuntimeError: CUDA out of memory. Tried to allocate 120.00 MiB (GPU 0; 4.00 GiB total capacity; 3.30 GiB already allocated; 0 bytes free; 3.35 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

xita

2022-11-05 19:23

Solved.

Python was eating my VRAM.

去年夏天

2022-11-06 13:48

You have run out of VRAM.
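
The PyTorch error line itself states how much memory the card has and how much was already in use. This minimal sketch pulls those numbers out of the message and compares the total with the 4 GB minimum stated in the article. `parse_cuda_oom` and `below_minimum` are hypothetical helper names, and the regular expression only targets the message format shown in this thread.

```python
import re

_TO_GIB = {"MiB": 1 / 1024, "GiB": 1.0}
_PATTERN = re.compile(
    r"(?P<total>[\d.]+) (?P<tu>[MG]iB) total capacity; "
    r"(?P<used>[\d.]+) (?P<uu>[MG]iB) already allocated"
)


def parse_cuda_oom(message):
    """Return (total_gib, allocated_gib), or None if the text does not match."""
    match = _PATTERN.search(message)
    if match is None:
        return None
    total = float(match["total"]) * _TO_GIB[match["tu"]]
    used = float(match["used"]) * _TO_GIB[match["uu"]]
    return total, used


def below_minimum(message, minimum_gib=4.0):
    """True when the reported total VRAM is under the given minimum."""
    parsed = parse_cuda_oom(message)
    return parsed is not None and parsed[0] < minimum_gib
```

For the 2 GiB card reported earlier in the thread, `below_minimum` returns True, which suggests the card is simply under the article's minimum requirement.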

oga

2022-11-02 13:44

venv “D:\NOVELAI\stable-diffusion-webui\venv\Scripts\Python.exe”

Python 3.11.0 (main, Oct 24 2022, 18:26:48) [MSC v.1933 64 bit (AMD64)]

Commit hash: 65522ff157e4be4095a99421da04ecb0749824ac

Installing torch and torchvision

Traceback (most recent call last):

File “D:\NOVELAI\stable-diffusion-webui\launch.py”, line 248, in

prepare_enviroment()

File “D:\NOVELAI\stable-diffusion-webui\launch.py”, line 169, in prepare_enviroment

run(f'”{python}” -m {torch_command}’, “Installing torch and torchvision”, “Couldn’t install torch”)

File “D:\NOVELAI\stable-diffusion-webui\launch.py”, line 34, in run

raise RuntimeError(message)

RuntimeError: Couldn’t install torch.

Command: "D:\NOVELAI\stable-diffusion-webui\venv\Scripts\python.exe" -m pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113

Error code: 1

stdout: Looking in indexes: https://pypi.org/simple, https://download.pytorch.org/whl/cu113

stderr: ERROR: Could not find a version that satisfies the requirement torch==1.12.1+cu113 (from versions: none)

ERROR: No matching distribution found for torch==1.12.1+cu113

去年夏天

2022-11-02 14:09

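First, install the Python version specified in the article. The program needs Python 3.10.6 to run, and even a different patch version can cause errors.

Then delete the \stable-diffusion-webui\venv\ folder, configure a proxy for pip, git, and python, and try running webui-user.bat again.

尼米兹1234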

2022-11-21 17:12

I ran into the same problem, and it still fails even with a proxy on. What should I do?

Blancho

2022-12-09 10:43

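I hit the same problem. After some digging I found that the package index has no build of this package for Python 3.11 yet. Downgrading to 3.10 fixed it.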
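
The failure above comes down to the interpreter version: the article asks for Python 3.10.6, and the reply reports that the pinned torch build had no Python 3.11 packages. This short sketch checks the interpreter before launching. `is_supported_python` is a hypothetical helper name. Accepting any 3.10.x release is an assumption, since the article itself recommends 3.10.6 exactly.

```python
import sys


def is_supported_python(version_info=None, required=(3, 10)):
    """True when the major.minor version equals the required pair."""
    info = sys.version_info if version_info is None else version_info
    return tuple(info[:2]) == tuple(required)


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if is_supported_python():
        print(f"Python {current} is in the 3.10 series.")
    else:
        print(f"Python {current} found; the article recommends 3.10.6.")
```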

oga

2022-11-02 13:01

Couldn’t launch python

exit code: 9009

stderr:

'python' is not recognized as an internal or external command,

operable program or batch file.

Launch unsuccessful. Exiting.

Press any key to continue . . .

去年夏天

2022-11-02 14:07

During installation, tick the Add Python to PATH option.

allant

2022-10-31 16:56

The most detailed tutorial I have seen, thank you.

A question for the author: what is the difference between the two naifu models, Naifu Leaks 4chan and Naifu Leaks animefull-latest?

去年夏天

2022-10-31 17:52

It is as the table says.

The 7 GB Naifu latest is better suited to training. For generating images, the 4 GB basic version is enough.

allant

2022-11-01 18:37

Thanks!

liangddyy

2022-10-29 09:56

Thanks for sharing. Very detailed.

大同小异

2022-10-24 21:15

Running webui-user.bat keeps giving this error. What can I do? Turning on a VPN does not help either.

RuntimeError: Couldn’t install gfpgan.

Command: “D:\stable-diffusion-webui\venv\Scripts\python.exe” -m pip install git+https://github.com/TencentARC/GFPGAN.git@8d2447a2d918f8eba5a4a01463fd48e45126a379 –prefer-binary

Error code: 1

去年夏天

2022-10-24 21:21

Did an earlier install fail partway through? Delete the entire stable-diffusion-webui folder and start over.

斋

2022-10-29 12:38

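You can try ignoring the message and simply running it again several times. It may be caused by a slow connection, or by being on a China Telecom line. I got the same message, and after rerunning it repeatedly for about two and a half hours it worked.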

cori

2022-10-21 16:36

Thanks for the explanation.

joey

2022-10-20 23:24

thank you.